Selected Projects

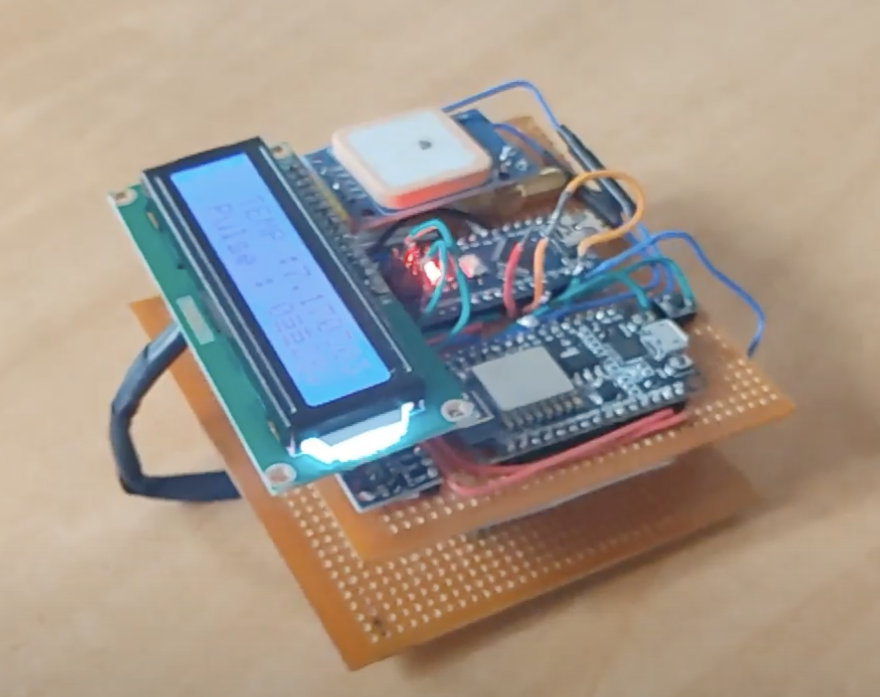

SeEar

Low-cost AR device that displays localized real-time captions to enhance the educational experience of Deaf and Hard-of-Hearing (DHH) students.

AiSee

AI-powered companion that helps people with visual impairments access visual information independently.

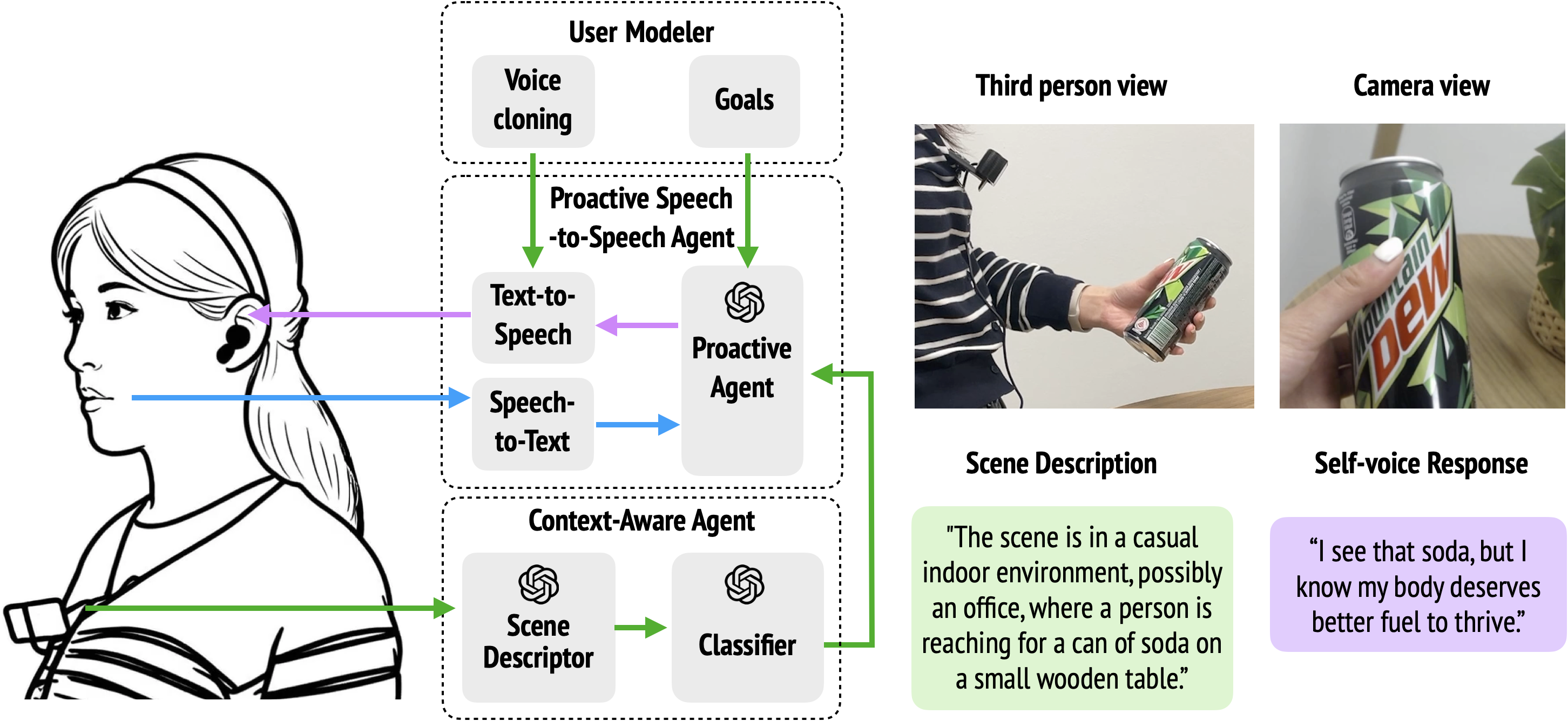

Mirai

Wearable AI system with an integrated camera, real-time speech processing, and personalized voice cloning that provides proactive, contextual nudges for positive behavior change.

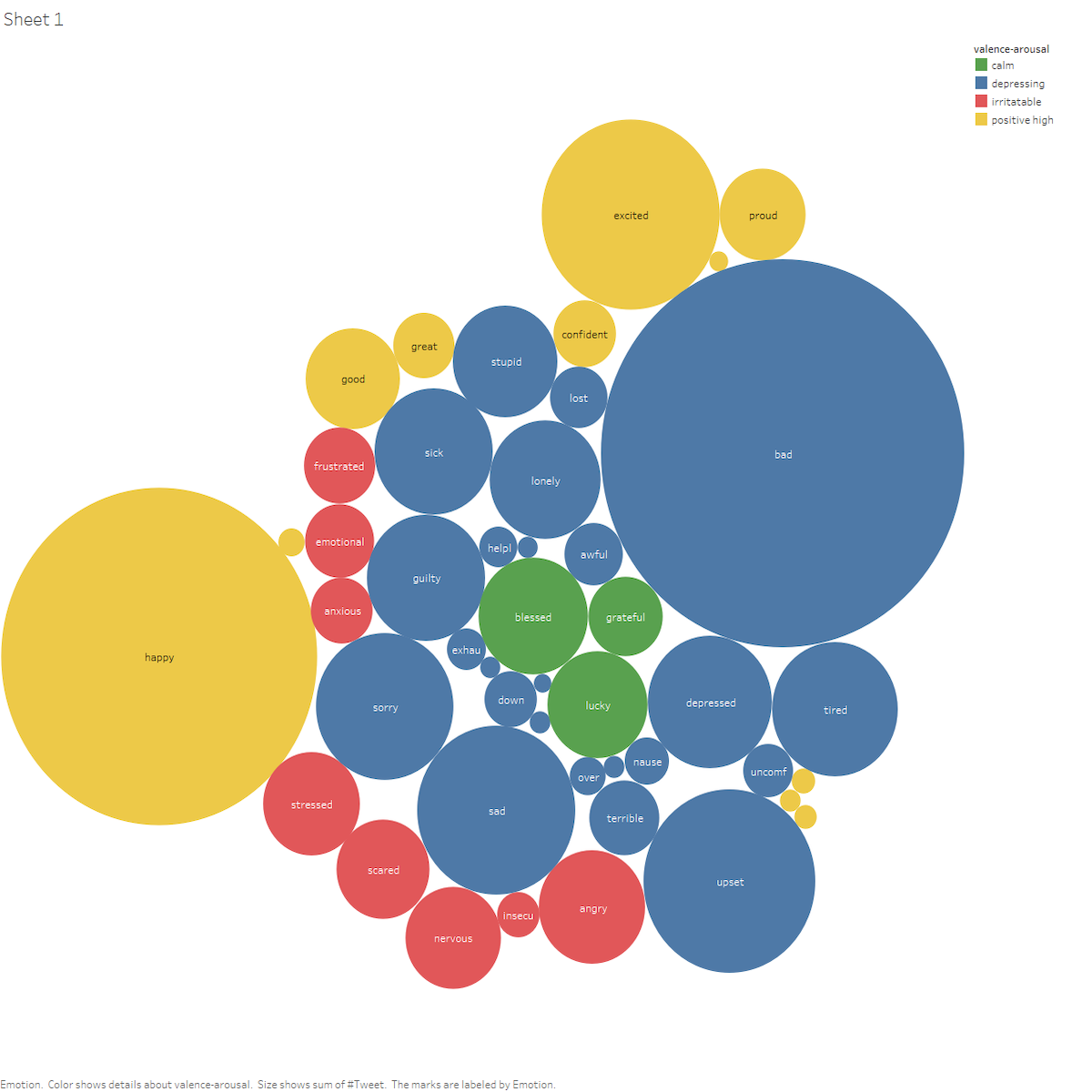

Hatthini

Conversational AI companion that helps users regulate and manage their emotions.

Kavy

Conversational AI agent designed to foster spoken-language skills and build self-confidence.

ICOAE

Wristband and mobile app for health monitoring and emergency response, designed for elderly individuals.

SpeechAssist

Mobile app designed to support advanced stammering treatments such as Delayed Auditory Feedback (DAF).