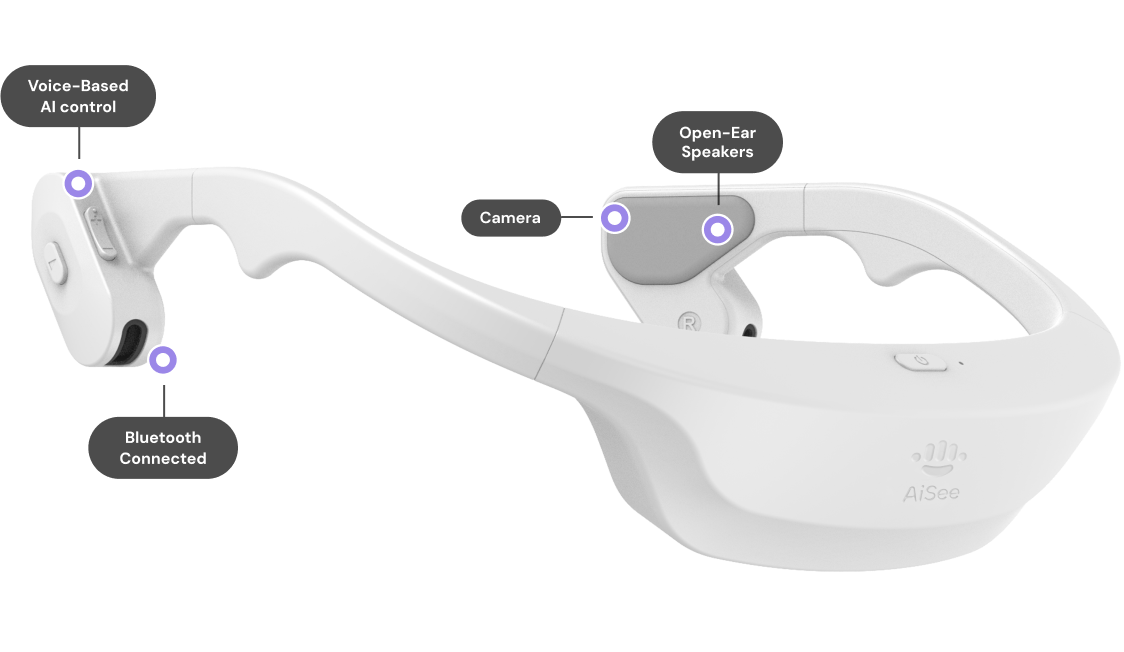

AiSee

A smart wearable that helps people with visual impairments (PVI) access visual information seamlessly.

Overview

The world's first visual conversational AI assistant designed for and with people who are blind or visually impaired.

Role

- Developed firmware and software for an Android wearable.

- Integrated advanced speech and image processing algorithms.

- Achieved 3x faster response times and higher accuracy than leading products.

- Created the multimodal agentic AI architecture that enables seamless interaction with vision-language models.

- Currently building a visual memory layer to enhance proactive memory recall.

Key Findings

- Currently leading a study with 5 visually impaired participants to evaluate trust, satisfaction, and system accuracy.